Synchrony & Alignment#

Written by Luke Chang and based on a tutorial on ISC

Synchrony between individuals happens at several levels from behavior to brain activity (Nummenmaa et al., 2018, Nastase et al., 2019). To an observer, synchrony during interaction or joint motion (Hale et al., 2020) can reflect prosocial qualities such rapport (Miles et al., 2009) or affiliation (Hove and Risen, 2009). During physically arousing ritualistic experiences, observers may selectively synchronize their heart rates while observing related, but not unrelated individuals participating in the ritual (Konvalinka et al., 2011) and the degree of affective synchrony can predict the perceived social connection between two individuals (Cheong et al., 2020). Synchrony of brain activity is associated with, among other things, shared psychological perspectives toward a stimulus (Lahnakoski et al., 2014, Yeshurun et al., 2017, Yeshurun et al., 2017, Kang & Wheatley, 2017, Cheong et al., 2020) and friendship (Parkinson et al., 2018), and may also be disturbed in psychiatric conditions ranging from developmental conditions such as autism (Hasson et al., 2009; Salmi et al., 2013, Byrge et al., 2015) to more acute conditions such as first-episode psychosis (Mäntylä et al., 2018). Thus, measures of synchrony can offer a simple window to many psychological processes.

In brain imaging, synchrony of brain activity is most commonly measured using intersubject correlations (ISC; Hasson et al., 2004). As the name implies, this method calculates linear correlations between participants and derives summary statistics from these correlations to measure the level of similarity of brain activity. Overall, the brain activity measured with fMRI during naturalistic stimulation conditions can be thought to consist of four main sources: (1) stimulus-driven brain activity between individuals shared by all or most of the participants, (2) individual or idiosyncratic activity elicited by the stimulus, (3) intrinsic activity that is not time-locked to the stimulus, and (4) noise from various sources. The idea behind ISC is to identify brain activity that is shared by a large proportion of the participants (category 1). Thus, this method evaluates how much of an individual’s brain activity is explained by this shared component. By contrast, if smaller groups of participants, e.g. pairs of friends within the study, share similar individual activity patterns (category 2), it may be better captured by the dyadic values in the pairwise matrices using techniques such as IS-RSA (Parkinson et al., 2018, Chen et al., 2020, Finn et al., 2020). Generally, the third category of activity is not readily detected by ISC, but can be revealed using alternative techniques that don’t necessarily assume time locking such as hidden markov models (Chang et al., 2021). Using inventive experimental designs, e.g. during verbal recall of previously experienced stimuli (Chen et al., 2017), it is still possible to extract shared brain activity patterns by temporally reorganizing the data, even when the original experiences of the participants were out of sync. The optimal choice of analysis will depend on the research question and the type of shared activity patterns that are of particular interest. Most commonly, ISCs are calculated locally within each voxel (or region), but this approach has also been extended to functional connectivity (Simony et al., 2016). Intersubject correlations give a summary statistic of synchrony over long periods of time, usually over an entire imaging session. However, the level of synchrony may change considerably from one moment to the next with the experimental condition and can be investigated using time-varying measures of synchrony.

In this tutorial, we will cover:

how to compute pairwise ISC

how to perform hypothesis tests with ISC data.org/features/notebooks/timecorr.html) tutorial)

how to perform dynamic ISC

how to apply isc to any feature space

how to apply isc to a low dimensional feature space

Computing Intersubject Correlation#

Pairwise vs Average Response#

Generally, ISCs are calculated using one of two main approaches. First, one calculates pairwise correlations between all participant pairs to build a full intersubject correlation matrix. The second approach, uses the average activity timecourse of the other participants as a model for each individual left out participant. This procedure produces individual, rather than pairwise, spatial maps of similarity reflecting how similarly, or “typically”, each person’s brain activates. These maps lend themselves to similar analyses as one might perform with first level results of a traditional general linear model (GLM) analysis. However, some of the individual variability is lost with the latter approach, and thus the ISC values are typically of much higher magnitude compared to the pairwise matrices.

Summary Statistic#

The next step after computing either the pairwise or average similarity across participants is to summarize the overall level of synchrony across participants. A straightforward approach is to compute the mean correlation. However, to make the correlation coefficients more normally distributed across the range of values, the Fisher’s Z transformation (inverse hyperbolic tangent) is typically applied before computing the mean correlation. The Fisher’s Z transformation mainly affects the higher end of absolute correlation values, eventually stretching the correlation coefficient 1 to infinity. However, with the typical range of values of pairwise ISCs, the effects of this transformation are relatively small reaching ~10% at the higher end of the scale of r=0.5. More recently, it has been suggested that computing the median, particularly when using the pairwise approach, provides a more accurate summary of the correlation values (Chen et al., 2016).

Hypothesis tests#

Performing hypothesis tests that appropriately account for the false positive rate can be tricky with ISC because of the dependence between the pairwise correlation values and the inflated number of variables in the pairwise correlation matrices. Though there have been proposals to use mixed-effects models for a parametric solution (Chen et al., 2017), we generally recommend using non-parametric statistics when evaluating the significance of these correlations.

There are two general non-parametric approaches to performing hypothesis tests with ISC. The first method is a permutation or randomization method, achieved by creating surrogate data and repeating the same analysis many times to build an empirical null distribution (e.g. 5-10k iterations). However, to meet the exchangeability assumption, it is important to consider the temporal dependence structure. Surrogate data can be created by circularly shifting the timecourses of the participants, or scrambling the phases of the Fourier transform of the signals and transforming these scrambled signals back to the time domain (Theiler et al., 1992, Lancaster et al., 2018). Various blockwise scrambling techniques have also been applied and autoregressive models have been proposed to create artificial data for statistical inference . These approaches have the benefit that, when properly designed, they retain important characteristics of the original signal, such as the frequency content and autocorrelation, while removing temporal synchrony in the data.

To illustrate these permutation based approaches, the animation below depicts the process of creating these null distributions and compares these to a similar distribution built based on real resting state data of the same duration in the same participants recorded just prior to the movie data in the same imaging study. Resting state is an ideal condition for demonstrating the true null distribution of no synchrony intersubject correlation as it involves no external synchronizing factors apart from the repeating noise of the scanner gradients, which are generally not of interest to us. Thus, any correlations in the resting state data arise by chance. As can be seen, the null distributions based on the surrogate data follow the distribution of resting state ISCs well as the number of iterations increases. However, the distributions are sometimes considered too liberal.

The second approach employs a subject-wise bootstrap on the pairwise similarity matrices. Essentially, participants are randomly sampled with replacement and then a new similarity matrix is computed with these resampled participants. As a consequence of the resampling procedure, sometimes the same subjects are sampled multiple times, which introduces correlation values of 1 off the diagonal. Summarizing the ISC using the median can minimize the impact of these outliers. These values are then shifted by the real summary statistics to produce an approximately zero-centered distribution. Python implementations in Brainiak and nltools convert these values to NaNs by default so that they are not included in the overall ISC summary statistic. If you would like to learn more about resampling methods, we encourage you to read our brief introduction available on the dartbrains course.

Getting Started#

Before getting started with this tutorial, we need to make sure you have the necessary software installed and data downloaded.

Software#

This tutorial requires the following Python packages to be installed. See the Software Installation tutorial for more information.

seaborn

matplotlib

numpy

scipy

pandas

nltools

nilearn

sklearn

datalad

Let’s now load the modules we will be using for this tutorial.

%matplotlib inline

import os

from tqdm.notebook import tqdm

import glob

import numpy as np

from numpy.fft import fft, ifft, fftfreq

import pandas as pd

import matplotlib.pyplot as plt

from matplotlib import gridspec

from matplotlib.animation import FuncAnimation

import seaborn as sns

from nltools.data import Brain_Data, Adjacency

from nltools.mask import expand_mask, roi_to_brain, create_sphere

from nltools.stats import (isc, isc_group, isfc, isps, fdr, threshold, phase_randomize, circle_shift, _butter_bandpass_filter, _phase_mean_angle, _phase_vector_length, zscore, align)

from nilearn.plotting import view_img_on_surf, view_img, plot_stat_map

from sklearn.metrics import pairwise_distances

from sklearn.utils import check_random_state

from scipy.stats import ttest_1samp, pearsonr

from scipy.signal import hilbert

from IPython.display import HTML

from fnl_tools.utils import rec_to_time

import datalad.api as dl

import warnings

warnings.simplefilter('ignore')

Data#

This tutorial will be using the Friday Night Lights dataset and will require downloading the Average ROI csv files.

We have already extracted the data for you to make this easier and have written out the average activity within each ROI into a separate csv file for each participant. If you would like to get practice doing this yourself, here is the code we used. Note that we are working with the hdf5 files as they load much faster than the nifti images, but either one will work for this example.

mask = Brain_Data('http://neurovault.org/media/images/2099/Neurosynth%20Parcellation_0.nii.gz')

file_list = glob.glob(os.path.join(download_path, 'ds003521', 'derivatives', 'denoised', 'smoothed', '*hdf5'))

for f in tqdm(file_list):

sub_dat = Brain_Data(f)

sub_roi = sub_dat.extract_roi(mask)

sub_roi = pd.DataFrame(sub_roi).T

output_name = f"{os.path.basename(f).split('_bold.hdf5')[0]}_average_roi.csv"

sub_roi.to_csv(os.path.join(download_path, 'ds003521', 'derivatives', 'denoised', 'smoothed', output_name), index=False)

For now, we will be downloading the csv files directly from github, but you should also be able to do this using datalad, once some of the OpenNeuro syncing has completed.

You will want to change data_dir to wherever you have installed the Friday Night Lights datalad repository. We will initialize a datalad dataset instance and get the files we need for this tutorial. If you’ve already downloaded everything, you can skip this cell. See the Download Data Tutorial for more information about how to install and use datalad.

Here is example code:

data_dir = '/Users/lukechang/Downloads/FNL'

# If dataset hasn't been installed, clone from GIN repository

if not os.path.exists(data_dir):

dl.clone(source='https://github.com/OpenNeuroDatasets/ds003521.git', path=data_dir)

# Initialize dataset

ds = dl.Dataset(data_dir)

# Get Cropped & Denoised CSV Files

result = ds.get(glob.glob(os.path.join(data_dir, 'derivatives', 'denoised', 'smoothed', f'*average_roi.csv')))

For this tutorial, you can also access the data directly from github.

sub_list = ['sub-sid000705',

'sub-sid000708',

'sub-sid000707',

'sub-sid000678',

'sub-sid000677',

'sub-sid000475',

'sub-sid000375',

'sub-sid000681',

'sub-sid000683',

'sub-sid000498',

'sub-sid000697',

'sub-sid000698',

'sub-sid000695',

'sub-sid000692',

'sub-sid000216',

'sub-sid000570',

'sub-sid000669',

'sub-sid000688',

'sub-sid000668',

'sub-sid000573',

'sub-sid000667',

'sub-sid000671',

'sub-sid000217',

'sub-sid000529',

'sub-sid000679',

'sub-sid000476',

'sub-sid000704',

'sub-sid000706',

'sub-sid000710',

'sub-sid000680',

'sub-sid000682',

'sub-sid000494',

'sub-sid000694',

'sub-sid000699']

sub_timeseries = {}

for sub in tqdm(sub_list):

sub_data = pd.read_csv(f'https://raw.githubusercontent.com/mind-tutorials/mind_book/master/data/fnl_average_roi/{sub}_task-movie_run-1_space-MNI152NLin2009cAsym_desc-preproc_trim_smooth6_denoised_average_roi.csv')

sub_timeseries[sub] = sub_data

data_dir = '/Users/lukechang/Downloads/FNL'

# If dataset hasn't been installed, clone from GIN repository

if not os.path.exists(data_dir):

dl.clone(source='https://github.com/OpenNeuroDatasets/ds003521.git', path=data_dir)

# Initialize dataset

ds = dl.Dataset(data_dir)

# Get Cropped & Denoised CSV Files

result = ds.get(glob.glob(os.path.join(data_dir, 'derivatives', 'denoised', 'smoothed', f'*average_roi.csv')))

# Load CSV files

file_list = glob.glob(os.path.join(data_dir, 'derivatives', 'denoised', 'smoothed', f'*average_roi.csv'))

sub_timeseries = {}

for f in tqdm(file_list):

sub = os.path.basename(f).split('_')[0]

sub_timeseries[sub] = pd.read_csv(f)

action summary:

get (notneeded: 34)

Pairwise Synchrony#

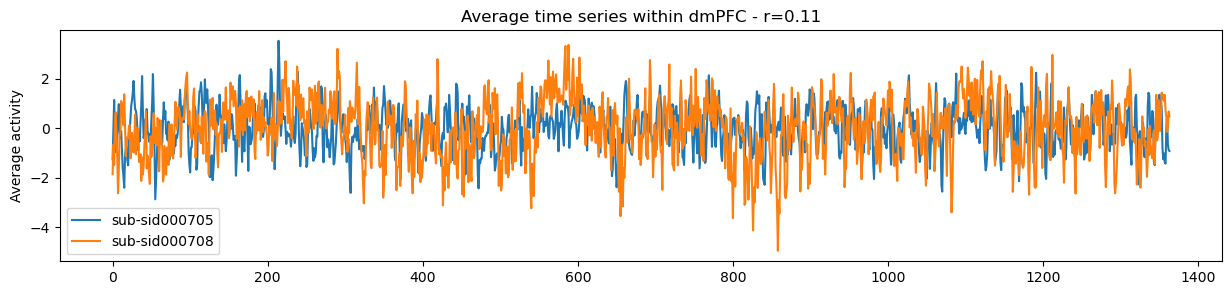

What if we were interested in examining if two subjects synchronized within their dmPFC while watching the movie?

There are many ways to test this, but a very straightforward approach is to simply correlate the timeseries of average activity within the dmPFC to the average activity from a different participant.

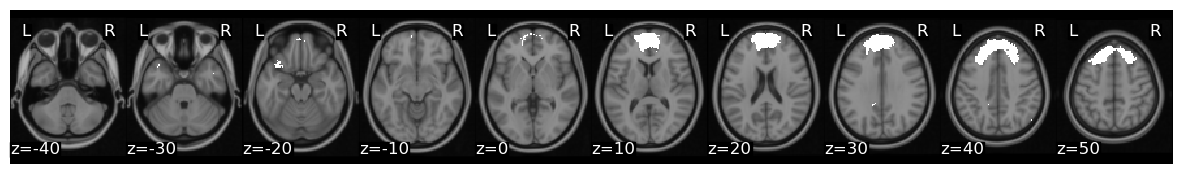

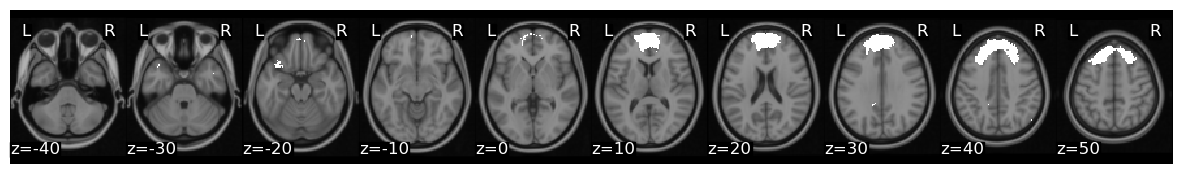

Ok, now let’s download the k=50 whole brain meta-analytic parcellation of the neurosynth database (de la Vega, 2016) from neurovault. Each ROI is indicated with a unique integer. We can expand this mask into 50 separate binary masks with the expand_mask function. The dmPFC corresponds to an integer index of 2.

mask = Brain_Data('http://neurovault.org/media/images/2099/Neurosynth%20Parcellation_0.nii.gz')

mask_x = expand_mask(mask)

mask.plot()

sub_1 = 'sub-sid000705'

sub_2 = 'sub-sid000708'

roi = 2

mask_x[roi].plot()

r, p = pearsonr(sub_timeseries[sub_1].iloc[:, roi], sub_timeseries[sub_2].iloc[:, roi])

f,a = plt.subplots(figsize=(15,3))

a.plot(sub_timeseries[sub_1].iloc[:, roi])

a.plot(sub_timeseries[sub_2].iloc[:, roi])

a.legend([sub_1, sub_2])

a.set_ylabel('Average activity')

a.set_title(f'Average time series within dmPFC - r={r:.02}')

Text(0.5, 1.0, 'Average time series within dmPFC - r=0.11')

As you can see this is very simple to assess overall synchrony. One thing that can be slightly more tricky is how to perform hypothesis tests to assess statistical significance. The p-values from the pearsonr scipy function assumes that each row of the data are independent, which is likely violated in this example as fMRI data tends to be autocorrelated. There are numerous ways to account for this using resampling statistics. We recommend using circle shifting or phase randomization, which are described in more detail below.

Intersubject correlation (ISC) Analysis#

Ok, now what if we were interested in assessing the overall level of synchrony across our entire sample? We can extend our pairwise synchrony concept to all participants and then summarizing the total pairwise synchrony using the mean or median.

let’s see how ISC works with real data. In this tutorial, we will be computing ISC on average activity within 50 ROIs using data from the Friday Night Lights dataset using the nltools Python toolbox. We also recommend checking out the Brainiak tutorial, and the Matlab ISC-Toolbox (Kauppi et al., 2014).

Now, let’s pick a single ROI to demonstrate how to perform ISC analyses. We can pick any region, but let’s continue with the dmPFC (roi=2), feel free to play with different regions as you work with the code.

We can plot the mask and create a new pandas DataFrame that has the average vmPFC activity for each participant.

roi = 2

mask_x[roi].plot()

def get_subject_roi(data, roi):

sub_rois = {}

for sub in data:

sub_rois[sub] = data[sub].iloc[:, roi]

return pd.DataFrame(sub_rois)

sub_rois = get_subject_roi(sub_timeseries, roi)

sub_rois.head()

| sub-sid000705 | sub-sid000708 | sub-sid000707 | sub-sid000678 | sub-sid000677 | sub-sid000475 | sub-sid000375 | sub-sid000681 | sub-sid000683 | sub-sid000498 | ... | sub-sid000679 | sub-sid000476 | sub-sid000704 | sub-sid000706 | sub-sid000710 | sub-sid000680 | sub-sid000682 | sub-sid000494 | sub-sid000694 | sub-sid000699 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -1.257059 | -1.858981 | 0.055510 | -2.927054 | 0.001443 | 0.976214 | -0.091158 | 1.122817 | 0.531115 | -1.476508 | ... | -0.816042 | -1.364593 | 0.133366 | -2.057195 | 1.901717 | -0.849037 | -0.132114 | -4.361229e+00 | -0.863538 | -1.691733 |

| 1 | 0.025426 | -0.666974 | -0.661008 | -1.038320 | -1.200573 | 0.360406 | -0.165949 | -0.136538 | 0.619254 | -1.421292 | ... | -0.026740 | -2.155036 | 0.347466 | -2.337125 | 0.614552 | -0.656935 | -0.109674 | -1.787996e-12 | -0.656501 | -1.883794 |

| 2 | 1.147395 | -1.490594 | -1.106923 | -1.106748 | -0.171776 | 0.517466 | -0.011003 | 0.039086 | -0.711524 | -1.461454 | ... | 1.600135 | -2.700619 | 0.747714 | -1.796568 | 1.175404 | -0.381551 | 0.379087 | -3.886152e-12 | 0.714567 | -1.965230 |

| 3 | -0.312306 | 0.235479 | -0.832777 | -2.265747 | 2.064173 | 1.440031 | 0.581691 | 1.074168 | -0.916229 | -0.676008 | ... | 2.817513 | -2.574495 | 1.161320 | -1.159793 | 1.798948 | -0.109981 | 0.521651 | 3.098448e-12 | 0.582420 | -1.078426 |

| 4 | -0.960072 | 0.649027 | -0.094664 | -2.235001 | 1.333921 | 1.734485 | 0.790967 | 0.677707 | -0.524876 | 0.147219 | ... | 2.265140 | -0.002140 | 0.662223 | -0.648039 | 1.305884 | 0.865690 | -0.304318 | -5.992719e-13 | 0.707843 | -1.072950 |

5 rows × 34 columns

Circle Shift Randomization#

To perform ISC we will be using the nltools.stats.isc function. There are three different methods implemented to perform hypothesis tests (i.e., circular shifting data, phase randomization, and subject-wise bootstrap). We will walk through an example of how to run each one.

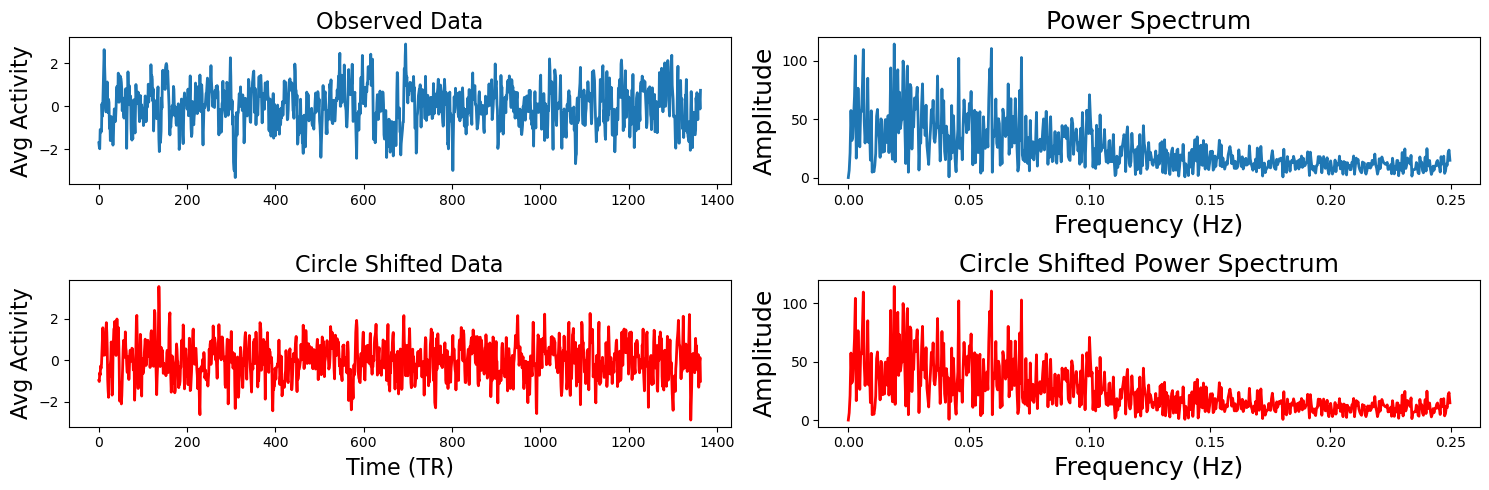

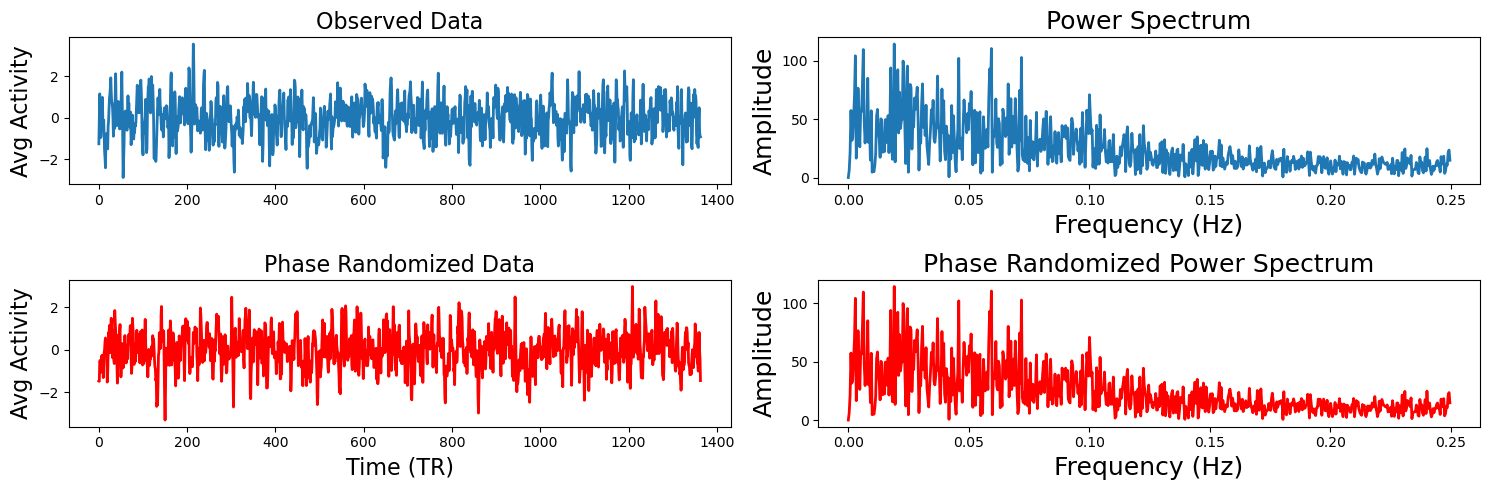

The idea behind circular shifting the data is to generate random surrogate data that has the same autoregressive and temporal properties of the original data (Lancaster et al., 2018). This is fairly straightforward and involves randomly selecting a time point to become the new beginning of the timeseries and then concatenating the rest of the data at the end so that it has the same length as the original data. Of course, there will potentially be a sudden change in the data where the two parts were merged.

To demonstrate how this works and that the circular shifted data has the same spectral properties of the original data, we will plot one subject’s time series and shift it using the nltools.stats.circle_shift function. Next to both timeseries we plot the coefficients from a fast fourier transform.

sub_1 = 'sub-sid000705'

sampling_freq = .5

f,a = plt.subplots(nrows=2, ncols=2, figsize=(15, 5))

a[0,0].plot(sub_rois[sub], linewidth=2)

a[0,0].set_ylabel('Avg Activity', fontsize=16)

a[0,1].set_xlabel('Time (TR)', fontsize=18)

a[0,0].set_title('Observed Data', fontsize=16)

fft_data = fft(sub_rois[sub_1])

freq = fftfreq(len(fft_data), 1/sampling_freq)

n_freq = int(np.floor(len(fft_data)/2))

a[0,1].plot(freq[:n_freq], np.abs(fft_data)[:n_freq], linewidth=2)

a[0,1].set_xlabel('Frequency (Hz)', fontsize=18)

a[0,1].set_ylabel('Amplitude', fontsize=18)

a[0,1].set_title('Power Spectrum', fontsize=18)

circle_shift_data = circle_shift(sub_rois[sub_1])

a[1,0].plot(circle_shift_data, linewidth=2, color='red')

a[1,0].set_ylabel('Avg Activity', fontsize=16)

a[1,0].set_xlabel('Time (TR)', fontsize=16)

a[1,0].set_title('Circle Shifted Data', fontsize=16)

fft_circle = fft(circle_shift_data)

a[1,1].plot(freq[:n_freq], np.abs(fft_circle)[:n_freq], linewidth=2, color='red')

a[1,1].set_xlabel('Frequency (Hz)', fontsize=18)

a[1,1].set_ylabel('Amplitude', fontsize=18)

a[1,1].set_title('Circle Shifted Power Spectrum', fontsize=18)

plt.tight_layout()

Now that you understand how the circular shifting method works with a single time series, let’s compute the ISC of the Friday Night Lights viewing data within the dmPFC roi with 5000 permutations. The function outputs a dictionary that contains the ISC values, the p-value, the 95% confidence intervals, and optionally returns the 5000 samples. All of the permutation and bootstraps are parallelized and will use as many cores as your computer has available.

stats_circle = isc(sub_rois, method='circle_shift', n_samples=5000, return_null=True)

print(f"ISC: {stats_circle['isc']:.02}, p = {stats_circle['p']:.03}")

ISC: 0.085, p = 0.0002

stats_circle.keys()

dict_keys(['isc', 'p', 'ci', 'null_distribution'])

Phase Randomization#

Next we will show how phase randomization works. This method projects the data into frequency space using a fast fourier transform and randomizes the phase and then projects the data back into the time domain (Theiler et al., 1992, Lancaster et al., 2018). If you would like to learn more about Fourier transforms, check out our tutorial on Dartbrains. Similar to the circular shifting method, this generates a random surrogate of the data, while maintaining a similar temporal and autoregressive structure as the original data. We will generate the same plots from the example above to illustrate how this works using the nltools.stats.phase_randomize function.

sub_1 = 'sub-sid000705'

sampling_freq = .5

f,a = plt.subplots(nrows=2, ncols=2, figsize=(15, 5))

a[0,0].plot(sub_rois[sub_1], linewidth=2)

a[0,0].set_ylabel('Avg Activity', fontsize=16)

a[0,1].set_xlabel('Time (TR)', fontsize=18)

a[0,0].set_title('Observed Data', fontsize=16)

fft_data = fft(sub_rois[sub_1])

freq = fftfreq(len(fft_data), 1/sampling_freq)

n_freq = int(np.floor(len(fft_data)/2))

a[0,1].plot(freq[:n_freq], np.abs(fft_data)[:n_freq], linewidth=2)

a[0,1].set_xlabel('Frequency (Hz)', fontsize=18)

a[0,1].set_ylabel('Amplitude', fontsize=18)

a[0,1].set_title('Power Spectrum', fontsize=18)

phase_random_data = phase_randomize(sub_rois[sub_1])

a[1,0].plot(phase_random_data, linewidth=2, color='red')

a[1,0].set_ylabel('Avg Activity', fontsize=16)

a[1,0].set_xlabel('Time (TR)', fontsize=16)

a[1,0].set_title('Phase Randomized Data', fontsize=16)

fft_phase = fft(phase_random_data)

a[1,1].plot(freq[:n_freq], np.abs(fft_phase)[:n_freq], linewidth=2, color='red')

a[1,1].set_xlabel('Frequency (Hz)', fontsize=18)

a[1,1].set_ylabel('Amplitude', fontsize=18)

a[1,1].set_title('Phase Randomized Power Spectrum', fontsize=18)

plt.tight_layout()

stats_phase = isc(sub_rois, method='phase_randomize', n_samples=5000, return_null=True)

print(f"ISC: {stats_phase['isc']:.02}, p = {stats_phase['p']:.03}")

ISC: 0.085, p = 0.0002

As you can see the ISC value is identical as the median of the pairwise correlations are identical. The p-values are also similar and likely reflect the limited precision of the possible p-values that can be computed using only 5,000 permutations. For greater precision, you will need to increase the number of permutations, but remember that this will also increase the computational time.

Subject-wise Bootstrapping#

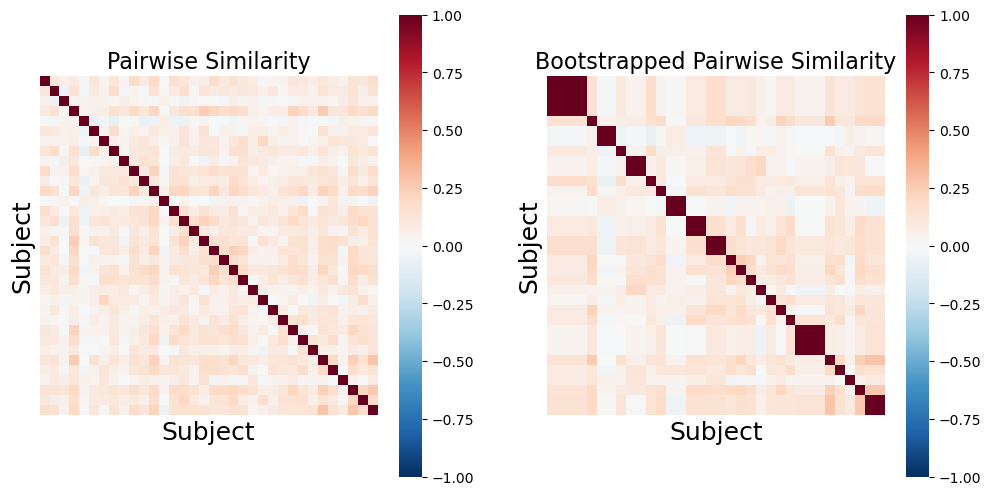

The final approach we will illustrate is subject-wise bootstrapping of the pairwise similarity matrix. This approach is more conservative than the previously described methods and is almost an order of magnitude faster shuffling the similarity matrix rather than recomputing the pairwise similarity for the null distribution (Chen et al., 2016). Bootstrapping and permutation tests are different types of resampling statistics (see our resampling tutorial for a more in depth overview). Bootstrapping is typically used more for generating confidence intervals around an estimator, while permutation tests are used for performing hypothesis tests. However, p-values can also be computed using a bootstrap by subtracting the ISC from the null distribution and evaluating the percent of samples from the distribution that are smaller than the observed ISC (Hall et al., 1991).

Just like the examples above, we will illustrate what an example bootstrapped similarity matrix looks like. Notice how some subjects are repeatedly resampled, which means that there are multiple values of perfect correlations found off the diagonal. This should be accounted for by using the median summary statistic of the lower triangle. However, both Brainiak and nltools toolboxes convert these values to NaNs to minimize the impact of these outliers on the summary statistic.

def bootstrap_subject_matrix(similarity_matrix, random_state=None):

'''This function shuffles subjects within a similarity matrix based on recommendation by Chen et al., 2016'''

random_state = check_random_state(random_state)

n_sub = similarity_matrix.shape[0]

bootstrap_subject = sorted(random_state.choice(np.arange(n_sub), size=n_sub, replace=True))

return similarity_matrix[bootstrap_subject, :][:, bootstrap_subject]

similarity = 1 - pairwise_distances(pd.DataFrame(sub_rois).T, metric='correlation')

f,a = plt.subplots(ncols=2, figsize=(12, 6), sharey=True)

sns.heatmap(similarity, square=True, cmap='RdBu_r', vmin=-1, vmax=1, xticklabels=False, yticklabels=False, ax=a[0])

a[0].set_ylabel('Subject', fontsize=18)

a[0].set_xlabel('Subject', fontsize=18)

a[0].set_title('Pairwise Similarity', fontsize=16)

sns.heatmap(bootstrap_subject_matrix(similarity), square=True, cmap='RdBu_r', vmin=-1, vmax=1, xticklabels=False, yticklabels=False, ax=a[1])

a[1].set_ylabel('Subject', fontsize=18)

a[1].set_xlabel('Subject', fontsize=18)

a[1].set_title('Bootstrapped Pairwise Similarity', fontsize=16)

Text(0.5, 1.0, 'Bootstrapped Pairwise Similarity')

stats_boot = isc(sub_rois, method='bootstrap', n_samples=5000, return_null=True)

print(f"ISC: {stats_boot['isc']:.02}, p = {stats_boot['p']:.03}")

ISC: 0.085, p = 0.0002

The bootstrap procedure tends to run much faster than the permutation methods on our computer, which is one of the reasons that the authors of the toolbox recommend this approach, beyond it being more conservative (Chen et al., 2016).

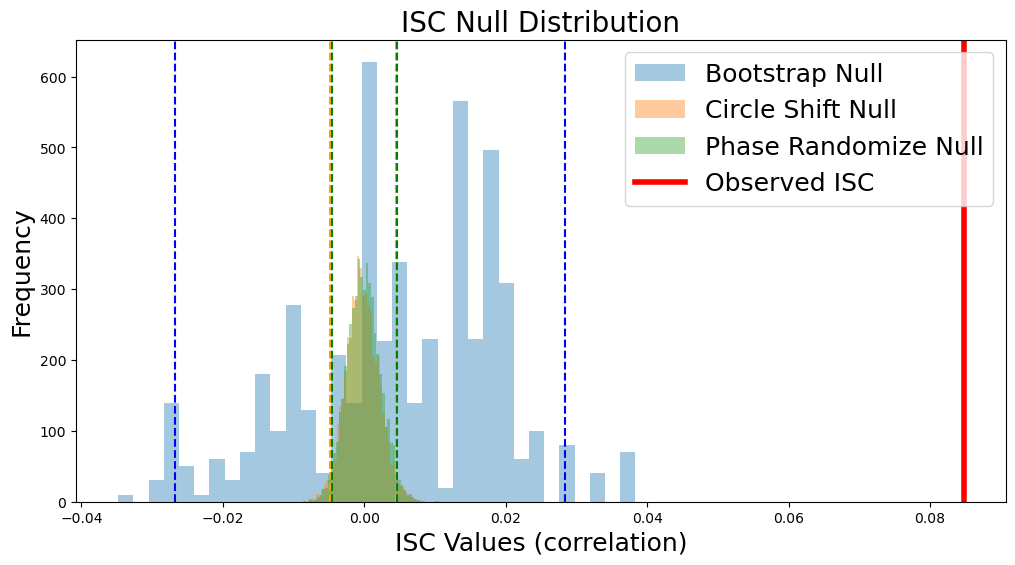

Null Distributions#

Since each of our examples saved the null distribution, we can plot a histogram of the null distribution from each method including the confidence intervals. Notice that the circle shift and phase randomization methods produce highly similar null distributions and confidence intervals, while the bootstrap method has a wider and less symmetric distribution with the current number of iterations. However, the observed ISC of 0.085 (red line) exceeds all of the samples from the null distribution yielding a very small p-value. Notice that our observed null distributions using the surrogate data derived from the Sherlock data are very similar to the animation of null data presented above.

plt.figure(figsize=(12,6))

sns.distplot(stats_boot['null_distribution'] - stats_boot['isc'], kde=False, label='Bootstrap')

sns.distplot(stats_circle['null_distribution'], kde=False, label='Bootstrap')

sns.distplot(stats_phase['null_distribution'], kde=False, label='Bootstrap')

plt.ylabel('Frequency', fontsize=18)

plt.xlabel('ISC Values (correlation)', fontsize=18)

plt.title('ISC Null Distribution', fontsize=20)

plt.axvline(stats_boot['isc'], linestyle='-', color='red', linewidth=4)

plt.legend(['Bootstrap Null','Circle Shift Null', 'Phase Randomize Null','Observed ISC'], fontsize=18)

plt.axvline(stats_boot['ci'][0] - stats_boot['isc'], linestyle='--', color='blue')

plt.axvline(stats_boot['ci'][1] - stats_boot['isc'], linestyle='--', color='blue')

plt.axvline(stats_circle['ci'][0], linestyle='--', color='orange')

plt.axvline(stats_circle['ci'][1], linestyle='--', color='orange')

plt.axvline(stats_phase['ci'][0], linestyle='--', color='green')

plt.axvline(stats_phase['ci'][1], linestyle='--', color='green')

<matplotlib.lines.Line2D at 0x16c7b3e20>

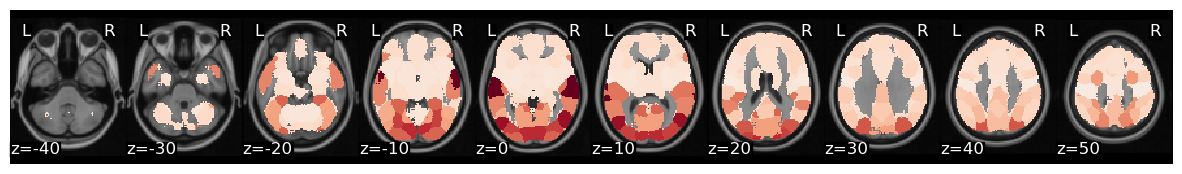

Whole brain ISC#

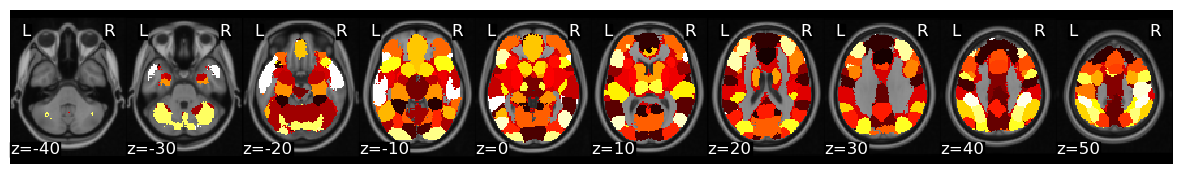

Now, let’s calculate ISC looping over each of the 50 ROIs from the whole-brain meta-analytic parcellation (de la Vega, 2016). Here we loop over each ROI and grab the column from each subject’s dataframe. We then run ISC on the combined subject’s ROI timeseries using the median method and compute a hypothesis test using the subject-wise bootstrap method with 5000 samples (Chen et al., 2016). Finally, we convert each correlation and p-value from each region back into a Brain_Data instance.

isc_r, isc_p = {}, {}

for roi in tqdm(range(len(mask_x))):

stats = isc(get_subject_roi(sub_timeseries, roi), n_samples=5000, metric='median', method='bootstrap')

isc_r[roi], isc_p[roi] = stats['isc'], stats['p']

isc_r_brain, isc_p_brain = roi_to_brain(pd.Series(isc_r), mask_x), roi_to_brain(pd.Series(isc_p), mask_x)

Now we can plot the ISC values to visualize which regions had a higher overall ISC.

isc_r_brain.plot(cmap='RdBu_r')

view_img(isc_r_brain.to_nifti())

We can threshold using bonferroni correction (p < 0.001 for k=50 parcellation). Alternatively, we can threshold using false discovery rate, by setting thr=fdr(isc_p_brain.data). In this example, FDR is more conservative than bonferroni.

view_img_on_surf(threshold(isc_r_brain, isc_p_brain, thr=.001).to_nifti())

Temporal dynamics of intersubject synchrony#

The majority of research using fMRI has focused on correlations calculated over entire datasets to reveal the average connectivity or intersubject similarity in a group of participants. However, functional brain networks are widely thought to be dynamic. Thus, it is not clear whether tools like correlation, which assumes a constant statistical dependence between the variables over the entire imaging session, are the most appropriate way to analyze data gathered during complex naturalistic stimulation.

A simple, and popular way of looking at temporal variability of synchrony while limiting the effects of signal amplitudes is to calculate correlations within sliding time windows. This allows the estimation of synchrony also during time windows when the signals are close to their mean values as the amplitude within each time window is standardized when the correlation is calculated. However, the length of the temporal window forces a trade-off between temporal accuracy and stability of the correlation coefficient calculated in that window. Very short time windows allow one to follow precisely when correlations occur, but short windows yield extremely unstable correlations, which can be dominated completely by e.g. single co-occurring spikes or slopes. Thus, sliding-window correlations in short time-windows are often characterized by extreme correlation values that change signs wildly.

Calculating the phase synchronization or phase locking of signals is one option to estimate time-varying synchronization of signals in a way that largely separates synchronization from signal amplitudes. It has been used widely for electrophysiological measures such as EEG and MEG, and more recently also for fMRI (Glerean et al., 2012). Phase synchronization leverages the Hilbert transform to transform the real-valued signals into a complex valued, analytic signal, which is a generalization of the phasor notation of sinusoidal signals that are used widely in engineering applications.

For illustration, two examples of analytic signals with constant frequency and amplitude are shown in the bottom panel of the animation, plotted in three dimensions (real, imaginary and time axes). We have used the cosine of the angular difference as a measure of pairwise synchrony. This produces time-averaged values that are consistent with the ISCs in the regions. In contrast to a time-invariant phasor, an analytic signal has a time-varying amplitude envelope and frequency and can thus be used to track changes in synchrony over time. However, for meaningful separation of the envelope and phase of the signal, the original signal has to be contained in a limited frequency band, which can be obtained through band-pass filtering. The smaller this frequency band is, the better the amplitude envelope is separated into a lower frequency than the phase of the signal in the pass-band (Glerean et al., 2012). However, poorly designed filters may affect the shape of the signal considerably and in extreme cases even remove the signal of interest. For example, some filters can cause non-linear phase shifts across the frequency spectrum, or excessively tight pass-band may miss important frequencies completely.

Compared to sliding-window correlations, phase synchronization has the benefit that no explicit time windowing is required and synchronization is estimated at the original sampling frequency of the signals (though you still do need to choose a specific narrow frequency band). However, in a single pairwise comparison, phase synchrony can get extreme values by chance, much like a stopped clock that shows the right time twice a day. This is illustrated in the bottom panel of the animation. Despite the two signals being independent, the histogram on the left shows that many of the time points have extreme synchrony values. Accordingly, the estimate of mean synchrony oscillates with the phase of the signals, until eventually stabilizing around zero as expected for independent signals. Thus, phase synchrony of two signals has the potential of producing extreme synchrony values much like time windowed correlations. This can be mitigated by averaging. Averaging over the timepoints of a full session, intersubject phase synchronization (ISPS) of regional activity produces highly similar group-level results to ISC. However, this removes the benefit of the temporal accuracy of ISPS and is thus of limited use. By contrast, averaging over (pairs of) subjects improves the reliability of synchrony in a larger population while retaining the temporal accuracy.

Intersubject Phase Synchrony#

Now, let’s start working through some code to build an intuition for the core concepts behind intersubject phase synchrony (ISPS). We will begin by creating an animation of the phase angles.

First, we need to compute the instantaneous phase angle of our average ROI activity for each subject. We will use an infinite impulse response (IIR) bandpass butterworth filter. This requires specifying the sampling_frequency in cycles per second (i.e., Hz), which is \(\frac{1}{tr}\), and lower and upper cutoff frequencies also in Hz. We will then apply a hilbert transform and extract the phase angle for each time point.

Let’s extract signal from primary auditory cortex, which we can assume will synchronize strongly across participants (roi=35) and use a lower bound cutoff frequency of 0.04Hz and an upper bound of 0.07Hz as recommended by (Glerean et al., 2012).

To visualize the data, we will plot 100 TRs of each participant’s phase angles from the auditory cortex using a polar plot. Notice that for some time points all subjects have a different phase angle. These time points should have low synchrony values (i.e., resultant vector length close to zero). Other time points the phase angles will cluster together and all face the same direction. These time points will have high phase synchrony (i.e., resultant vector length close to one). We can also compute the mean phase angle for the group using circular statistics (red line). We don’t actually care so much about the mean angle, but rather the length of the resultant vector. The resultant vector length is our metric of intersubject phase clustering, or the degree to which participants are in phase with each other at a given time point. Notice how the length gets shorter the more participants are out of phase and longer when they are all facing the same direction.

roi = 2

tr = 2

lowcut = .04

highcut = .07

phase_angles = np.angle(hilbert(_butter_bandpass_filter(get_subject_roi(sub_timeseries, roi), lowcut, highcut, 1/tr), axis=0))

xs, ys = [], []

fig = plt.figure(constrained_layout=False, figsize=(10,10))

spec = gridspec.GridSpec(ncols=4, nrows=4, figure=fig)

a0 = fig.add_subplot(spec[:2, :2], projection='polar')

plt.polar([0, _phase_mean_angle(phase_angles[0,:])], [0,1], color='red', linewidth=3)

a1 = fig.add_subplot(spec[:2, 2:4], projection='polar')

plt.polar([0, phase_angles[0,0]], [0,1], color='navy', linewidth=1, alpha=.2)

a2 = fig.add_subplot(spec[2:, :])

a2.plot(_phase_vector_length(phase_angles))

a2.set_ylabel('Phase Synchrony', fontsize=18)

a2.set_xlabel('Time (TRs)', fontsize=18)

def animate(i, xs, ys):

xs = np.linspace(0, i, i+1, endpoint=True)

ys = phase_angles[:i+1, :]

a0.clear()

a0.plot([0, _phase_mean_angle(phase_angles[i,:])], [0, _phase_vector_length(phase_angles[i,:])], color='red', linewidth=3)

a0.set_title('Mean Phase Angle', fontsize=18)

a0.set_ylim([0,1])

a1.clear()

for j in range(ys.shape[1]):

a1.plot([0, phase_angles[i,j]], [0,1], color='navy', alpha=.5)

a1.set_title('Subject Phase Angles', fontsize=18)

a2.clear()

a2.plot(xs, _phase_vector_length(ys))

a2.set_ylim([0,1])

a2.set_ylabel('Resultant Vector Length', fontsize=18)

a2.set_xlabel('Time (TRs)', fontsize=18)

a2.set_title('Intersubject Phase Synchrony', fontsize=18)

plt.tight_layout()

animation = FuncAnimation(fig, animate, fargs=(xs, ys), frames=range(150,200), interval=150, blit=False)

plt.close(animation._fig)

HTML(animation.to_jshtml())